A recent requirement on a customer site was that the rows on a Performance Point scorecard be ordered by traffic light status: all rows with a red status appear at the top of the scorecard, followed by the amber members and then the green ones. This is very easy to do once the scorecard is displayed, by clicking on filter mode and sorting by a column, but this approach has a couple of issues. 1) You have to do this every time, as you can't set the default sort order. 2) It assumes that you have a column that corresponds to your score value.

In this blog I touch upon a few areas:

1) How to create dynamic rows in a scorecard. When you design a scorecard, the rows are static, but there is a technique that can be applied to make them dynamic.

2) How to use a Filter Link "Member UniqueName" source value twice in a single scorecard: once to filter the KPI values and once to create the rows dynamically.

3) How to extend the dynamic rows concept to include ordering.

As have been

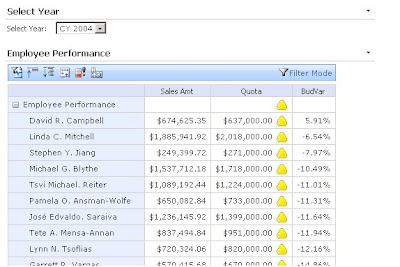

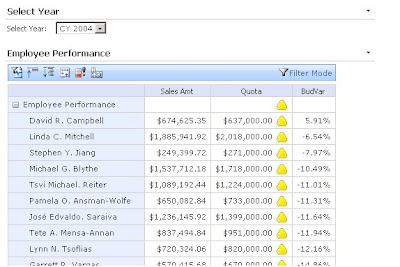

blogged about elsewhere, the designed behaviour of dimension members on a performance point scorecard is that once you put a dimension member on a row, it becomes a static row member and new members are not added (or removed) based upon any filter you select. However as indicated here, there is a way to solve this. I used this technique to list members on my performance point rows; however a small extension to this same technique allowed me to order by the indicator status as well. I have used Adventure Works as a source for my new Dashboard. The basic Dashboard looks like;

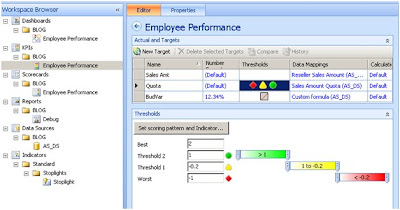

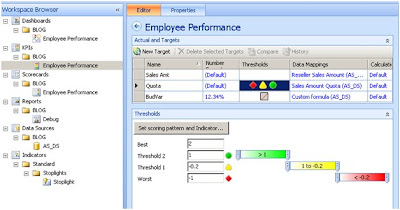

I have set my indicator on the column Quote, selected Band by Stated Score, and supplied the MDX for the score. See the MDX sample below.

    ([Measures].[Reseller Sales Amount] - [Measures].[Sales Amount Quota]) / [Measures].[Sales Amount Quota]
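To make the arithmetic behind the score concrete, here is a minimal Python sketch of the same calculation. The function name `score` and the sample figures are mine, not taken from the cube, and returning `None` for a missing or zero quota is an assumption of this sketch rather than a statement about how MDX handles it.

```python
from typing import Optional


def score(reseller_sales: float, quota: float) -> Optional[float]:
    """Relative variance to quota: (sales - quota) / quota.

    Returns None when there is no usable quota (an assumption of this
    sketch, chosen to avoid dividing by zero).
    """
    if not quota:
        return None
    return (reseller_sales - quota) / quota


# Hypothetical figures: 20% above quota, 20% below quota, no quota at all.
above = score(120000.0, 100000.0)
below = score(80000.0, 100000.0)
missing = score(50000.0, 0.0)
```

A positive value means the employee beat the quota and a negative value means they fell short, which is what the banding thresholds on the indicator are then applied to.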

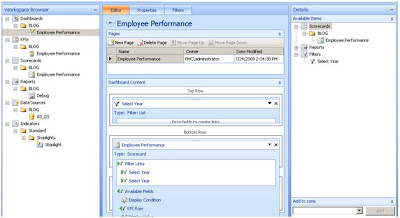

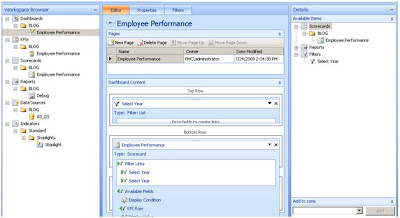

When creating the scorecard, I placed my KPI onto columns and then dragged the [Employee].[Employee].[Employee] attribute onto rows. This is just so that I can render a scorecard. Next I created a dashboard, and on this dashboard I created a filter based upon the Calendar Year. The next step is to drag both the scorecard and the filter onto the dashboard page.

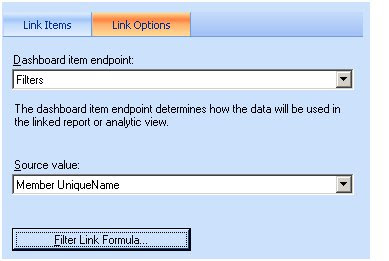

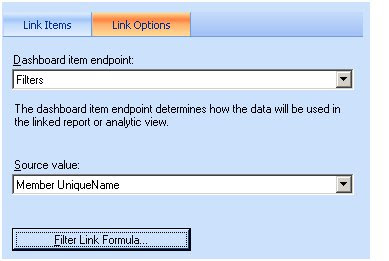

Now the fun starts. You will notice that I have the select Year Filter on the Employee Performance scorecard twice. This is because I want to do two things with the filter. 1) I want it to actually work as a filter for my KPI, so that we can see an employee's performance by year. 2) I want to re-use that filter in an MDX statement that determines which rows to display on the scorecard and how to order them.

The first filter is straightforward. I connect the Top Row Filter to the Filters Dashboard Item Endpoint and use the Member UniqueName as the Source Value.

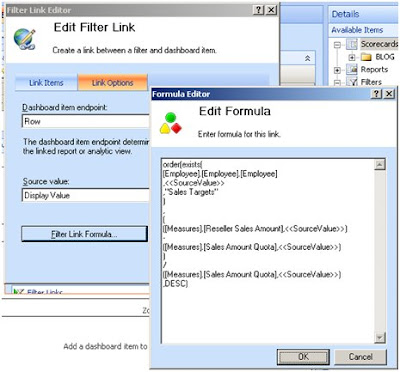

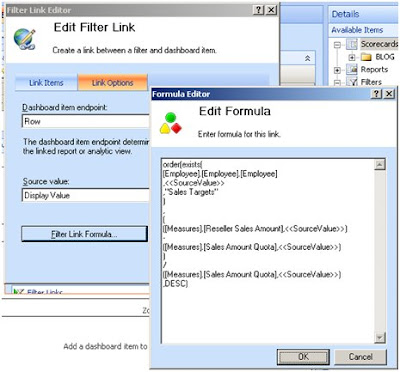

Now on to the second requirement, which is to generate the rows of the scorecard based upon the filter. I create a new filter link, and this time I connect the Dashboard Item Endpoint to the DisplayValue. I then configure the Filter Link Formula as shown below. My MDX formula consists of an Exists function wrapped inside an Order function. The Exists function gives me the set of employees that matches the year selected in the filter. The Order function then sorts this set according to a value, and the value I supply is the same formula that I use to calculate the score.

The sharp-eyed observer will spot a problem here: I am using SourceValue when the Dashboard Item Endpoint refers to DisplayValue. Shouldn't I refer to DisplayValue instead? I was hoping to be able to do this, and everything appeared to work great, except that the sort was not working. The employees came out in the same order every time. The syntax to work with DisplayValue is strtomember('[Date].[Calendar Year].[DisplayValue]'), but this did me no good. Instead, I need to use the SourceValue and the corresponding Member UniqueName a second time. Unfortunately the PPS scorecard builder does not allow you to do this. To get around it you have to edit the .bswx XML file. More on this later on.

My MDX used above.

    order(exists(
            [Employee].[Employee].[Employee]
            ,SourceValue
            ,"Sales Targets"
        )
        ,(
            ([Measures].[Reseller Sales Amount], SourceValue)
            -
            ([Measures].[Sales Amount Quota], SourceValue)
        )
        /
        ([Measures].[Sales Amount Quota], SourceValue)
        ,DESC)
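For readers less used to MDX, the following Python sketch mimics what the formula does conceptually: keep only the employees that have data for the selected year, compute the score for each, and sort descending. It is an illustration only; the dictionary layout, the employee names and the function name `order_rows` are hypothetical, and dropping employees with no quota is my simplification of what Exists does against the "Sales Targets" measure group.

```python
from typing import Dict, List, Tuple


def order_rows(facts: Dict[str, Tuple[float, float]]) -> List[str]:
    """Return employee names ordered by score, highest first.

    `facts` maps employee -> (reseller sales, quota) for the selected year.
    Employees with no quota are dropped, loosely mirroring the Exists step.
    """
    scored = []
    for name, (sales, quota) in facts.items():
        if not quota:
            continue  # no target for this year, so no row
        scored.append((name, (sales - quota) / quota))
    # reverse=True plays the role of the DESC flag in the formula
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [name for name, _ in scored]


# Hypothetical data for one selected year.
sample = {
    "Employee A": (120.0, 100.0),
    "Employee B": (80.0, 100.0),
    "Employee C": (100.0, 100.0),
    "Employee D": (50.0, 0.0),
}
rows = order_rows(sample)
```

The point of the sketch is that the sort key is the same expression as the score, so the row order and the indicator bands always agree.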

I put in the Filter Link Formula that refers to SourceValue and leave the Source Value entry on the Filter Link Editor as Display Value.

I now publish and have a look at my new scorecard.

The scorecard displays, but you can see that the sort order is not working. (I have put a BudVar column onto the scorecard to make it very obvious what value is being used to generate the score.)

At this stage I have to close my scorecard in the scorecard builder app. Then I browse to the Windows folder where I saved the .bswx file, take a copy, and edit the file in Notepad. I found that the fastest way to find the correct section in this file was to search for order(exists( which is the first bit of MDX in my Filter Link Formula.

The first bit of the highlighted section reads SourceColumnName="DisplayValue". Change this to SourceColumnName="MemberUniqueName". Save the file, open it again in Dashboard Designer and publish again. This time your scorecard should run as you want.
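If you would rather script this edit than repeat it by hand, something along the following lines could work. Treat it as a sketch under assumptions: it relies only on the two strings mentioned above, it assumes the SourceColumnName attribute sits before the formula in the file (as it did in mine), and it assumes the workspace file is UTF-8 text. The function names are my own. Check the result in Notepad before publishing.

```python
import shutil
from pathlib import Path

MARKER = "order(exists("  # start of the Filter Link Formula
OLD = 'SourceColumnName="DisplayValue"'
NEW = 'SourceColumnName="MemberUniqueName"'


def patch_filter_link(xml_text: str) -> str:
    """Switch the filter link that carries the formula to MemberUniqueName.

    Only the last DisplayValue attribute found before the formula is
    changed, so other filter links in the file are left alone.
    """
    marker_pos = xml_text.find(MARKER)
    if marker_pos == -1:
        raise ValueError("formula marker not found")
    attr_pos = xml_text.rfind(OLD, 0, marker_pos)
    if attr_pos == -1:
        raise ValueError("no DisplayValue attribute before the formula")
    return xml_text[:attr_pos] + NEW + xml_text[attr_pos + len(OLD):]


def patch_workspace(path: Path) -> None:
    """Back up the workspace file, then patch it in place (UTF-8 assumed)."""
    shutil.copy2(path, path.with_name(path.name + ".bak"))
    text = path.read_text(encoding="utf-8")
    path.write_text(patch_filter_link(text), encoding="utf-8")


# Made-up fragment: the element and attribute names other than
# SourceColumnName are hypothetical, not the real workspace schema.
sample = (
    '<Link SourceColumnName="DisplayValue" Target="TopRow"/>'
    '<Link SourceColumnName="DisplayValue" Formula="order(exists(...))"/>'
)
patched = patch_filter_link(sample)
```

Keeping a script like this alongside the workspace file also gives you a record of the manual step, so that a later edit in Dashboard Designer does not silently undo it.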

Finally! Now, this solution is not ideal, as you have to edit the .bswx file, which raises all sorts of questions about future maintenance of this edit. It is annoying that the system supports this functionality but you can't set it directly in the editor. I would advise caution to anyone who needs this. Is it really necessary in the solution? If it is, document the steps and put a procedure in place so that future edits to the .bswx file don't lose this change.

Have a good day

Mark
